The Transmission Control Protocol (TCP) is used to provide reliable, in-order delivery of messages over a network.

Reasons for TCP

The Internet Protocol (IP) works by exchanging groups of information called packets. Packets are short sequences of bytes consisting of a header and a body. The header describes the packet’s destination, which routers on the Internet use to pass the packet along, in generally the right direction, until it arrives at its final destination. The body contains the application data.

In cases of congestion, IP can discard packets, and, for efficiency reasons, two consecutive packets on the Internet can take different routes to the destination. As a result, packets can arrive at the destination in the wrong order.

TCP software libraries use IP and provide a simpler interface to applications by hiding most of the underlying packet structures, rearranging out-of-order packets, minimizing network congestion, and re-transmitting discarded packets. Thus, TCP significantly simplifies the task of writing network applications.

Source: http://en.wikipedia.org/wiki/Transmission_Control_Protocol

Applicability of TCP

TCP is used extensively by many of the Internet’s most popular application protocols and resulting applications, including the World Wide Web, E-mail, File Transfer Protocol, Secure Shell, and some streaming media applications. However, because TCP is optimized for accurate delivery rather than timely delivery, TCP sometimes incurs long delays while waiting for out-of-order messages or retransmissions of lost messages, and it is not particularly suitable for real-time applications such as Voice over IP. For such applications, protocols like the Real-time Transport Protocol (RTP) running over the User Datagram Protocol (UDP) are usually recommended instead.

Source: http://en.wikipedia.org/wiki/Transmission_Control_Protocol

Using TCP

Using TCP, applications on networked hosts can create connections to one another, over which they can exchange streams of data using stream sockets. TCP also distinguishes data for multiple connections by concurrent applications (e.g., Web server and e-mail server) running on the same host.

In the Internet protocol suite, TCP is the intermediate layer between the Internet Protocol (IP) below it, and an application above it. Applications often need reliable pipe-like connections to each other, whereas the Internet Protocol does not provide such streams, but rather only best effort delivery (i.e., unreliable packets). TCP does the task of the transport layer in the simplified OSI model of computer networks. The other main transport-level Internet protocols are UDP and SCTP.

Applications send streams of octets (8-bit bytes) to TCP for delivery through the network, and TCP divides the byte stream into appropriately sized segments (usually sized according to the maximum transmission unit (MTU) of the data link layer of the network to which the computer is attached). TCP then passes the resulting segments to the Internet Protocol for delivery through the network to the TCP module of the entity at the other end. TCP makes sure that no data is lost by giving each byte a sequence number (each segment carries the number of its first byte); the same numbers are used to deliver the data to the entity at the other end in the correct order. The TCP module at the far end sends back acknowledgments for data that has been successfully received; a timer at the sending TCP causes a timeout if an acknowledgment is not received within a reasonable round-trip time (RTT), and the (presumably) lost data is then re-transmitted. TCP checks that no bytes are corrupted by using a checksum: one is computed at the sender for each block of data before it is sent, and checked at the receiver.

Source: http://en.wikipedia.org/wiki/Transmission_Control_Protocol
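The segmentation and in-order delivery described above can be sketched in a few lines of Python. This is a minimal model, not a real TCP stack: the `segment` function, the `Receiver` class, the 536-byte segment size and the initial sequence number are illustrative assumptions, and the receiver assumes every segment arrives exactly once without overlapping another. In real TCP, sequence numbers count bytes and are 32 bits wide, so they wrap around.

```python
import random

SEQ_SPACE = 2**32  # TCP sequence numbers are 32-bit and wrap around


def segment(data: bytes, isn: int, mss: int) -> list[tuple[int, bytes]]:
    """Cut a byte stream into (sequence number, payload) pairs of at most mss bytes.

    Sequence numbers count bytes: each segment is labelled with the number
    of its first byte, starting from the initial sequence number isn.
    """
    return [((isn + off) % SEQ_SPACE, data[off:off + mss])
            for off in range(0, len(data), mss)]


class Receiver:
    """Delivers bytes in order, holding back segments that arrive early."""

    def __init__(self, isn: int):
        self.rcv_nxt = isn                        # next in-order byte expected
        self.out_of_order: dict[int, bytes] = {}  # early segments, keyed by sequence number
        self.delivered = bytearray()              # bytes handed to the application

    def on_segment(self, seq: int, payload: bytes) -> int:
        self.out_of_order[seq] = payload
        # Drain every buffered segment that now continues the in-order stream.
        while self.rcv_nxt in self.out_of_order:
            chunk = self.out_of_order.pop(self.rcv_nxt)
            self.delivered += chunk
            self.rcv_nxt = (self.rcv_nxt + len(chunk)) % SEQ_SPACE
        return self.rcv_nxt  # the value a cumulative ACK would carry


data = bytes(range(256)) * 10
isn = SEQ_SPACE - 1000                  # start near the top to show wraparound
segments = segment(data, isn, mss=536)
random.Random(1).shuffle(segments)      # simulate packets taking different routes
rx = Receiver(isn)
for seq, payload in segments:
    ack = rx.on_segment(seq, payload)
print(bytes(rx.delivered) == data, ack == (isn + len(data)) % SEQ_SPACE)  # True True
```

The receiver never hands bytes to the application out of order; segments that arrive early simply wait in `out_of_order` until the gap before them is filled.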

Services Provided by TCP

TCP provides more services than UDP because it is connection oriented whereas UDP is not. TCP allows for point-to-point communication, addressing, multiplexing, demultiplexing, reliability, full-duplex communication, flow control and congestion control.

The Internet works as well as it does partly thanks to the design of TCP, which can dynamically adapt to different network properties such as bandwidth, delay and packet loss, as well as recover from errors.

TCP splits user data into pieces no larger than 64K bytes and then sends each of these pieces as a separate IP datagram. When these IP datagrams are received at the destination, TCP reconstructs the original byte stream. The connection between the sender and the destination is established between sockets, and each socket is identified by the combination of an IP address and a port number.

A connection is established between two computers using what is called a “three-way handshake”.

The originator (you, hopefully) sends an initial packet called a “SYN” to establish communication and “synchronize” the sequence numbers used to count the bytes of data that will be exchanged. The destination then replies with a “SYN/ACK”, which “synchronizes” its own byte count with the originator and acknowledges the initial packet. The originator then returns an “ACK”, which acknowledges the packet the destination just sent. The connection is now “OPEN”, and communication between the originator and the destination is permitted until one of them sends a “FIN” or “RST” packet, or the connection times out. Most Internet protocols that need “connections” are built on TCP, and the “three-way handshake” is how each of those connections is established, much like picking up your phone, getting a dial tone, dialing the number, hearing it ring, and then hearing the other party say “hello” or “moshi moshi.”
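The sequence-number bookkeeping in the handshake can be made concrete with a small Python model. It is a sketch, not a network implementation: the `Segment` class and `three_way_handshake` function are hypothetical names, and it only computes the numbers each side would send. The one rule it encodes is that a SYN occupies one sequence number, so each side acknowledges the other's initial sequence number plus one.

```python
import secrets
from dataclasses import dataclass
from typing import Optional

SEQ_SPACE = 2**32


@dataclass
class Segment:
    flags: frozenset
    seq: int
    ack: Optional[int] = None   # only meaningful when "ACK" is in flags


def three_way_handshake(client_isn: int, server_isn: int) -> list[Segment]:
    # 1. Client -> server: SYN carrying the client's initial sequence number.
    syn = Segment(frozenset({"SYN"}), seq=client_isn)
    # 2. Server -> client: SYN/ACK. The SYN used up one sequence number, so the
    #    server acknowledges client_isn + 1 and announces its own initial number.
    syn_ack = Segment(frozenset({"SYN", "ACK"}), seq=server_isn,
                      ack=(syn.seq + 1) % SEQ_SPACE)
    # 3. Client -> server: ACK of the server's SYN; the connection is now open.
    ack = Segment(frozenset({"ACK"}), seq=(client_isn + 1) % SEQ_SPACE,
                  ack=(syn_ack.seq + 1) % SEQ_SPACE)
    return [syn, syn_ack, ack]


# Each side picks its own initial sequence number; real stacks make these hard to guess.
for seg in three_way_handshake(secrets.randbelow(SEQ_SPACE), secrets.randbelow(SEQ_SPACE)):
    print(sorted(seg.flags), seg.seq, seg.ack)
```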

TCP also closes a connection gracefully. When closing, the last data segment is followed by a FIN, which also has a sequence number associated with it. This way, if the FIN arrives before all segments have been received, the receiver waits for the missing data before closing.

The biggest sources of errors in message transmission are segments damaged in transmission and segments that fail to arrive entirely. The sender has no way of knowing whether a message has been received at the destination, so in TCP the receiver must acknowledge receipt. In UDP, since reliability is not a concern and the protocol is connectionless, this acknowledgment does not occur.

Of course, we need to switch our point of view as soon as the destination sends an acknowledgment, because at this point the receiver is acting as a sender. It is therefore entirely possible for the acknowledgment itself to get lost or damaged in transmission. Therefore, it is necessary to have timers on both the sender and the receiver that trigger a re-send after a certain amount of time, to make sure the application does not end up in a deadlock where both sides are waiting to receive something from the other.

What should the value of the timer be? It is possible to use a fixed timer or an adaptive scheme. Different values suit different networks, and even on a given network the best value changes with the amount of traffic. If the timer is set too short, unnecessary re-transmissions will clog the network, causing further delay and therefore even more time-outs, re-transmissions and ACKs! This can quickly become a rather messy situation. If, on the other hand, the timer is set too long, performance suffers because the application sits waiting for a re-transmission that should have happened earlier. The ideal timer is therefore aware of network conditions and uses this information to dynamically increase or decrease its value.

Another problem that must be dealt with arises from the fact that TCP re-transmits messages, as stated above, in order to guarantee delivery. Since messages and ACKs may be re-transmitted, the destination may receive duplicates. For example, imagine that the receiver sends an ACK to confirm receipt of a message, but heavy network traffic causes this ACK to travel slowly. Meanwhile, the sender is waiting for the ACK when its timer expires, so it re-transmits the message. Right after the message is sent, the ACK finally arrives, but the duplicate is already on its way! The sender will not send the message a third time, because the ACK was received, but the receiver will have to deal with the duplicate that will soon arrive. To deal with duplicates, the receiver can use the sequence number to determine whether the data has already been received. For this to work correctly, however, we must assume that the sequence number space is large enough that it does not wrap around within the lifetime of a packet. The sequence number space is finite: a connection starts counting at an initial sequence number, and if it stays open long enough and carries enough data, the numbers eventually reach the maximum value and wrap around to zero. At that point it is possible for two messages with the same sequence number to exist without being duplicates!
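Because sequence numbers wrap around, "is this number before that one?" has to be answered in modular arithmetic rather than by comparing raw integers. The Python sketch below shows the kind of modular comparison TCP implementations rely on; `seq_before` and `DuplicateFilter` are hypothetical names, and the filter is simplified to accept only segments that start exactly at the next expected byte. The comparison is only meaningful while two numbers are less than half the sequence space apart, which is exactly why the space must not wrap within a packet's lifetime.

```python
SEQ_SPACE = 2**32
HALF = 2**31


def seq_before(a: int, b: int) -> bool:
    """True if sequence number a comes before b in modular (wrapping) order.

    Only valid while a and b are less than half the sequence space apart.
    """
    return a != b and (b - a) % SEQ_SPACE < HALF


class DuplicateFilter:
    """Classifies arriving segments against the next expected sequence number."""

    def __init__(self, isn: int):
        self.rcv_nxt = isn

    def classify(self, seq: int, length: int) -> str:
        if seq == self.rcv_nxt:
            self.rcv_nxt = (self.rcv_nxt + length) % SEQ_SPACE
            return "new"
        if seq_before(seq, self.rcv_nxt):
            return "duplicate"      # already received; can be discarded
        return "out of order"       # a gap exists; an earlier segment is missing


f = DuplicateFilter(isn=SEQ_SPACE - 100)
print(f.classify(SEQ_SPACE - 100, 100))  # new (the next expected number wraps to 0)
print(f.classify(SEQ_SPACE - 100, 100))  # duplicate, despite the larger raw number
print(f.classify(0, 50))                 # new
print(f.classify(500, 50))               # out of order
```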

Congestion occurs when so much traffic is flowing through a network that individual messages are delayed, because messages are arriving more quickly than they can be processed. When this happens, routers begin dropping packets, possibly starting with those they treat as lower priority. As congestion builds, time-outs start to happen, causing messages to be re-transmitted. This is done to make sure the message does in fact arrive, but in a system experiencing congestion this reliability feature simply adds to the congestion. TCP handles this by estimating the round-trip delay from observed patterns of delay and then setting the timer to a value greater than the estimate. The timer is set higher than the estimate in order to account for variance in the round-trip time.
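One widely used version of this estimate is standardized in RFC 6298: a smoothed round-trip time (SRTT) and a smoothed deviation (RTTVAR) are updated with weights 1/8 and 1/4, and the timeout is RTO = SRTT + max(G, 4 × RTTVAR), with a 1-second minimum. The Python sketch below follows those formulas; the `RtoEstimator` name is hypothetical, and the clock granularity G defaults to 0 for simplicity.

```python
class RtoEstimator:
    """Retransmission timeout estimate following the RFC 6298 formulas."""

    ALPHA = 1 / 8   # weight of a new sample in the smoothed RTT
    BETA = 1 / 4    # weight of a new deviation in the RTT variance
    K = 4           # how many deviations of headroom to add

    def __init__(self, min_rto: float = 1.0, granularity: float = 0.0):
        self.srtt = None         # smoothed round-trip time (seconds)
        self.rttvar = None       # smoothed mean deviation of the RTT
        self.min_rto = min_rto
        self.granularity = granularity
        self.rto = 1.0           # initial timeout before any measurement

    def on_rtt_sample(self, r: float) -> float:
        if self.srtt is None:
            # First measurement: take it as the average, with large uncertainty.
            self.srtt = r
            self.rttvar = r / 2
        else:
            # Update the variance first, using the previous SRTT.
            self.rttvar = (1 - self.BETA) * self.rttvar + self.BETA * abs(self.srtt - r)
            self.srtt = (1 - self.ALPHA) * self.srtt + self.ALPHA * r
        self.rto = max(self.min_rto, self.srtt + max(self.granularity, self.K * self.rttvar))
        return self.rto


est = RtoEstimator()
for sample in (0.5, 0.5, 2.0):
    print(round(est.on_rtt_sample(sample), 4))   # prints 1.5, then 1.25, then 2.75
```

A steady RTT lets the timeout shrink toward the average, while a single slow sample raises the timeout far more than the average, because the variance term is multiplied by 4.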

RTO – Re-transmission timeout

As discussed above, a timer is set whenever a message is transmitted. If an acknowledgment is not received before this timer expires, a time-out occurs, which triggers the re-transmission of the message. Using a fixed timer is not a good idea because the most common cause of time-outs is congestion. If your network is congested and your message times out, re-transmitting it with the same timer value will most likely lead to another time-out if network conditions have not improved. Therefore, if we assume congestion is the culprit, it is important to give the message some extra time upon re-transmission, so that it has a better chance of reaching the destination and being acknowledged. Increasing the timer each time a message is re-transmitted is referred to as re-transmission timeout backoff. The most common type of RTO backoff is binary exponential backoff, which multiplies the current timer value by two each time a message is re-transmitted.
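Binary exponential backoff is short enough to write out directly. This Python sketch lists the timer value used for the original send and for each re-transmission; `backoff_schedule` is a hypothetical name, and the 60-second cap is an assumption chosen for illustration (RFC 6298 allows an upper bound on the timer as long as it is at least 60 seconds).

```python
def backoff_schedule(initial_rto: float, retransmissions: int, max_rto: float = 60.0) -> list[float]:
    """Timer value used for the first send and for each re-transmission.

    Binary exponential backoff doubles the timer after every time-out;
    max_rto is an assumed cap that stops the wait from growing without bound.
    """
    schedule = []
    rto = initial_rto
    for _ in range(retransmissions + 1):
        schedule.append(rto)
        rto = min(rto * 2, max_rto)
    return schedule


print(backoff_schedule(1.0, 7))
# [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 60.0, 60.0]
```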

Another important issue is shrinking the timer back down. Re-transmitted messages should never be used as a gauge when measuring RTT (round-trip time), because the acknowledgment received could actually be for the first transmission and not for the re-transmitted one! Therefore, the backed-off RTO should continue to be used until an ACK arrives for a message that was not re-transmitted (this rule is known as Karn’s algorithm). At that point, it is up to the designer to decide how to proceed. For example, the timer can be left constant for a given amount of time and then, after a certain number of messages have been acknowledged without needing re-transmission, divided by two to shrink it back down, on the assumption that network conditions have improved.
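The rule above and the halving strategy it suggests can be combined in one small timer object. This is a sketch under stated assumptions: `KarnTimer`, its parameters, and the choice of three clean ACKs before halving are all hypothetical; the point it illustrates is that ACKs for re-transmitted messages produce no RTT sample and do not shrink the timer.

```python
class KarnTimer:
    """Backed-off retransmission timer that ignores ambiguous RTT samples."""

    def __init__(self, base_rto: float = 1.0, max_rto: float = 60.0, clean_needed: int = 3):
        self.base_rto = base_rto          # timer value under normal conditions
        self.rto = base_rto               # current timer value
        self.max_rto = max_rto            # assumed cap on backoff
        self.clean_needed = clean_needed  # hypothetical: clean ACKs required before halving
        self.clean_streak = 0
        self.rtt_samples: list[float] = []

    def on_timeout(self) -> None:
        # Binary exponential backoff on every time-out.
        self.rto = min(self.rto * 2, self.max_rto)
        self.clean_streak = 0

    def on_ack(self, rtt: float, was_retransmitted: bool) -> None:
        if was_retransmitted:
            # Karn's rule: this ACK may answer the first copy, so the RTT is ambiguous.
            # Take no sample and keep the backed-off timer.
            self.clean_streak = 0
            return
        self.rtt_samples.append(rtt)      # unambiguous measurement
        self.clean_streak += 1
        if self.clean_streak >= self.clean_needed and self.rto > self.base_rto:
            # Conditions seem to have improved: shrink the timer by half.
            self.rto = max(self.rto / 2, self.base_rto)
            self.clean_streak = 0


timer = KarnTimer()
timer.on_timeout()
timer.on_timeout()                          # two time-outs: 1 -> 2 -> 4 seconds
timer.on_ack(3.9, was_retransmitted=True)   # ambiguous ACK: no sample, timer stays at 4
for _ in range(3):
    timer.on_ack(0.3, was_retransmitted=False)
print(timer.rto, len(timer.rtt_samples))    # 2.0 3
```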

Window Management

Slow-start is part of the congestion control used in TCP in order to avoid sending more data than the network is able to handle.

Slow-Start Algorithm

A slow-start algorithm consists of two distinct phases: the exponential growth phase, and the linear growth phase.

During the exponential growth phase, slow-start increases the TCP congestion window each time an acknowledgment is received, by the number of segments acknowledged. This continues until either an acknowledgment is not received for some segment or a predetermined threshold value is reached. If a loss event occurs, TCP assumes that it is due to network congestion and takes steps to reduce the offered load on the network. Once a loss event has occurred or the threshold has been reached, TCP enters the linear growth (congestion avoidance) phase, in which the window is increased by 1 segment per RTT until a loss event occurs.

Although the strategy is referred to as “slow-start”, its congestion window growth is quite aggressive.

The algorithm begins in the exponential growth phase with a congestion window size (cwnd) of 1 or 2 segments and increases it by 1 Segment Size (SS) for each ACK received. This effectively doubles the window size every round trip. This behavior continues until the cwnd reaches the size of the receiver’s advertised window or until a loss occurs.

When a loss occurs, half of the current cwnd is saved as the Slow Start Threshold (SSThresh) and slow start begins again from its initial cwnd. Once the cwnd reaches the SSThresh, TCP goes into congestion avoidance mode, where each ACK increases the cwnd by SS*SS/cwnd. This results in a linear increase of the cwnd.
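The two growth rules and the reaction to loss can be simulated one round trip at a time. This Python sketch assumes a 1000-byte segment size, an initial window of 1 segment, a starting SSThresh of 64 segments, exactly one ACK per full segment, and a floor of 2 segments on SSThresh (as RFC 5681 specifies); it ignores the receiver's advertised window. `on_ack`, `on_loss` and `simulate` are hypothetical names.

```python
MSS = 1000  # assumed segment size (SS) in bytes


def on_ack(cwnd: int, ssthresh: int) -> int:
    """Grow the congestion window (in bytes) for one newly acknowledged segment."""
    if cwnd < ssthresh:
        return cwnd + MSS                        # slow start: +1 SS per ACK
    return cwnd + max(1, MSS * MSS // cwnd)      # congestion avoidance: +SS*SS/cwnd per ACK


def on_loss(cwnd: int) -> tuple[int, int]:
    """Save half the window as SSThresh and restart from the initial window."""
    ssthresh = max(cwnd // 2, 2 * MSS)   # floor of 2 segments, as in RFC 5681
    return MSS, ssthresh


def simulate(rounds: int, loss_rounds: set[int], ssthresh: int = 64 * MSS) -> list[int]:
    """Congestion window, in whole segments, at the start of each round trip."""
    cwnd = MSS                           # initial window of 1 segment
    history = []
    for rtt in range(rounds):
        history.append(cwnd // MSS)
        if rtt in loss_rounds:
            cwnd, ssthresh = on_loss(cwnd)
            continue
        for _ in range(cwnd // MSS):     # one ACK per full segment sent this round trip
            cwnd = on_ack(cwnd, ssthresh)
    return history


print(simulate(10, loss_rounds={4}))
# [1, 2, 4, 8, 16, 1, 2, 4, 8, 8]
```

The window doubles each round trip until the loss at 16 segments, restarts from 1 with SSThresh set to 8, and after reaching 8 segments only creeps toward 9, which is the linear congestion avoidance phase.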

TCP Data Transfer

When a TCP connection is established, what is actually sent between the communicating entities is a stream of bytes, not messages; TCP does not preserve message boundaries. For example, if an application writes four messages of 512 bytes each (2048 bytes in total), they may be sent as any combination of pieces that adds up to 2048 bytes: four 512-byte pieces, two 1024-byte pieces, a single 2048-byte piece, and so on. If TCP is set to wait for a total of 2048 bytes before transmitting, it will not send your 512-byte messages until 4 of them are ready. If it instead sends 2 pieces of 1024 bytes each, only 2 messages need to be ready before transmission. The important thing to understand is that a message being ready to send does not mean that the message itself is sent as a unit; rather, pieces of the byte stream are sent and then recombined to re-create the message. It is also important to note that TCP may hold your message back and collect more data before transmitting. Certain flags can be used to request that data be sent without delay.
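Because TCP delivers a byte stream, an application that needs message boundaries has to mark them itself. A common approach is a length prefix; the Python sketch below assumes a 4-byte big-endian length header (a hypothetical format, not part of TCP) and shows the receiver rebuilding the four 512-byte messages from pieces that ignore message boundaries. `frame` and `Deframer` are illustrative names.

```python
import struct

HEADER = struct.Struct("!I")   # 4-byte big-endian length prefix (an assumed framing format)


def frame(message: bytes) -> bytes:
    """Prefix a message with its length so the receiver can find its end."""
    return HEADER.pack(len(message)) + message


class Deframer:
    """Rebuilds messages from a TCP byte stream, whatever the piece sizes."""

    def __init__(self):
        self.buffer = bytearray()

    def feed(self, chunk: bytes) -> list[bytes]:
        self.buffer += chunk
        messages = []
        while len(self.buffer) >= HEADER.size:
            (length,) = HEADER.unpack_from(self.buffer)
            if len(self.buffer) < HEADER.size + length:
                break                   # message not complete yet; wait for more bytes
            start = HEADER.size
            messages.append(bytes(self.buffer[start:start + length]))
            del self.buffer[:start + length]
        return messages


# Four 512-byte messages, delivered as 1000-byte pieces of the stream.
messages = [bytes([i]) * 512 for i in range(4)]
stream = b"".join(frame(m) for m in messages)
d = Deframer()
received = []
for off in range(0, len(stream), 1000):
    received += d.feed(stream[off:off + 1000])
print(received == messages)   # True
```

On the sending side, the sockets API's `TCP_NODELAY` option (in Python, `sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)`) is one way to ask TCP not to hold small writes back.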

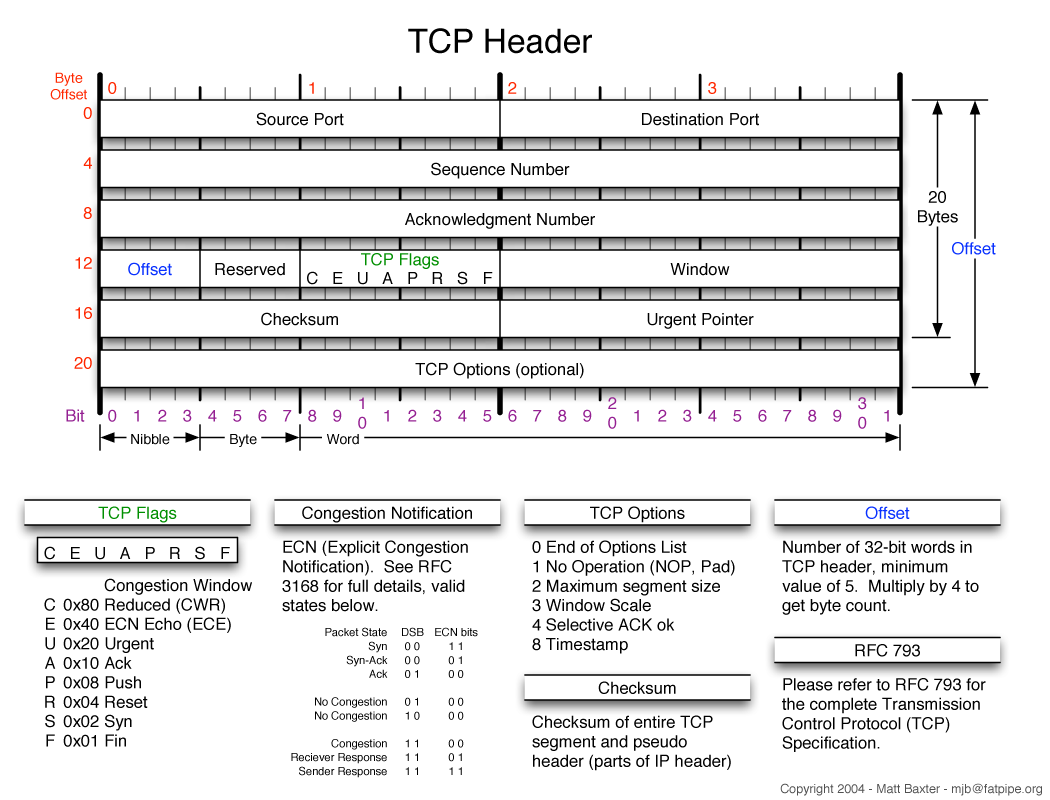

TCP Header

Source: http://www.visi.com/~mjb/Drawings/

TCP Header Fields

Source and destination port – 16-bit numbers identifying the ports of the two sockets between which the connection was established.

Sequence and acknowledgment numbers – These are both 32-bit numbers used to help ensure reliable delivery of messages. Every TCP segment sent carries a sequence number (that of its first data byte), and the acknowledgment number tells the other entity which sequence number it expects next.

URG – this will be set to 1 if the urgent pointer is used. The pointer will indicate an offset from the current sequence number which informs the receiver at which point the urgent data ends.

ACK – set to 1 to indicate that the acknowledgment number is valid.

PSH – set to 1 to indicate pushed data. Pushed data is data that the sender force-delivered without waiting for the buffer to fill.

RST – set to 1 to indicate a reset. This can be used to reject a connection or to refuse an invalid segment. It can also be used if the host has become confused for whatever reason and therefore would like to re-establish the connection.

SYN – used to establish a connection. If SYN = 1 and ACK = 0, a connection request is in progress. If SYN = 1 and ACK = 1, a connection acceptance is in progress.

FIN – set to 1 to indicate the end of user data and therefore to close the connection. As discussed earlier, data may still be received after the FIN: because the FIN has its own sequence number, segments with earlier sequence numbers that arrive after it are still accepted and processed.
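The fields above sit at fixed positions in the first 20 bytes of the header, so they can be unpacked with Python's `struct` module. This sketch parses only the fixed part plus any raw option bytes; `parse_tcp_header` is a hypothetical helper, and it ignores the two later-added congestion-notification flag bits (CWR and ECE).

```python
import struct

# Flag bits in the low byte of the flags field (CWR/ECE, added later, are ignored here).
FLAG_BITS = {"FIN": 0x01, "SYN": 0x02, "RST": 0x04, "PSH": 0x08, "ACK": 0x10, "URG": 0x20}
FIXED_HEADER = struct.Struct("!HHIIBBHHH")   # the 20-byte header without options


def parse_tcp_header(raw: bytes) -> dict:
    (src_port, dst_port, seq, ack, offset_byte, flag_byte,
     window, checksum, urgent_ptr) = FIXED_HEADER.unpack_from(raw)
    header_len = (offset_byte >> 4) * 4      # data offset is counted in 32-bit words
    return {
        "src_port": src_port,
        "dst_port": dst_port,
        "seq": seq,
        "ack": ack,
        "header_len": header_len,
        "flags": {name for name, bit in FLAG_BITS.items() if flag_byte & bit},
        "window": window,
        "checksum": checksum,
        "urgent_ptr": urgent_ptr,
        "options": raw[FIXED_HEADER.size:header_len],
    }


# A hand-built connection request: SYN = 1, ACK = 0, no options (data offset of 5 words).
syn = FIXED_HEADER.pack(50000, 80, 123456, 0, 5 << 4, FLAG_BITS["SYN"], 65535, 0, 0)
print(parse_tcp_header(syn))
```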

TCP Error Checking

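Error checking is accomplished using the checksum field in the TCP header. The sender computes the checksum over the header and the user data (together with a pseudo-header containing the source and destination IP addresses). The receiver repeats the calculation over the same bytes, including the checksum field itself, and if the result is not 0, there is an error somewhere in the segment.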
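The checksum TCP uses is the 16-bit one's-complement "Internet checksum". The Python sketch below computes it over an arbitrary run of bytes and shows the receiver-side check: recomputing over the data plus the checksum gives 0 when nothing has changed. It leaves out the real header layout and pseudo-header, and `ones_complement_sum` and `internet_checksum` are hypothetical names.

```python
def ones_complement_sum(data: bytes) -> int:
    """16-bit one's-complement sum of data, padding odd-length input with a zero byte."""
    if len(data) % 2:
        data += b"\x00"
    total = 0
    for i in range(0, len(data), 2):
        total += (data[i] << 8) | data[i + 1]
        total = (total & 0xFFFF) + (total >> 16)   # wrap the carry back into the low 16 bits
    return total


def internet_checksum(data: bytes) -> int:
    """Value the sender places in the checksum field (computed with that field set to 0)."""
    return ~ones_complement_sum(data) & 0xFFFF


# Sender: checksum the bytes, then transmit them together with the checksum.
payload = bytes([0x00, 0x01, 0xF2, 0x03, 0xF4, 0xF5, 0xF6, 0xF7])
csum = internet_checksum(payload)
segment = payload + csum.to_bytes(2, "big")

# Receiver: recomputing over everything, checksum included, gives 0 if nothing changed.
print(hex(csum), internet_checksum(segment))          # 0x220d 0
corrupted = bytes([segment[0] ^ 0x01]) + segment[1:]
print(internet_checksum(corrupted) != 0)              # True
```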

TCP Options

The options field in the TCP header allows extra features to be used or certain settings to be configured. Below are some examples:

– During connection setup, the connecting entities use this field to negotiate an acceptable maximum segment size (MSS).

– Since network speeds have increased dramatically since TCP was first introduced, the 32-bit sequence number space can now wrap around within the lifetime of a packet, so incorrect duplicate detection becomes a real concern. The time-stamp option described below can be used to tell old segments apart from new ones that carry the same sequence number. A related limit is the 16-bit window field, which caps the window at 64 KB; the window scale option allows the window to be scaled up to roughly 2^30 bytes.

– The options field can also be used to include a time-stamp which can then be used in order to determine round trip time, information that can then be used for congestion control.
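The sequence and acknowledgment number fields are 32 bits wide and count bytes, so a fast link can exhaust the sequence space quickly. This short Python calculation (with `wrap_time_seconds` as a hypothetical helper) shows how long it takes to send 2^32 bytes at a few example link speeds; at 1 Gbit/s it is about 34 seconds, well under the two-minute maximum segment lifetime assumed by the original TCP specification.

```python
SEQ_SPACE_BYTES = 2**32   # 32-bit sequence numbers count bytes


def wrap_time_seconds(bits_per_second: float) -> float:
    """Time to send 2**32 bytes, i.e. for the sequence numbers to wrap around."""
    return SEQ_SPACE_BYTES * 8 / bits_per_second


for label, rate in [("10 Mbit/s", 10e6), ("1 Gbit/s", 1e9), ("10 Gbit/s", 10e9)]:
    print(f"{label:>10}: {wrap_time_seconds(rate):8.1f} s")
```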

Additional Time Issues

Given all of the information presented above, there are some other timer issues to consider. As mentioned, timers are essential for avoiding deadlock: the re-transmission timer expires and causes the last message to be re-transmitted if no acknowledgment is received in a timely fashion. With flow control, it is also possible for the receiver’s advertised window to drop to 0, which causes the sender to stop sending messages temporarily until it is notified that there is space in the window again. Even in this situation, a timer (the persistence timer) must occasionally expire and cause the sender to ask the receiver whether it has space in its window. This is necessary because if the acknowledgment announcing the larger window were lost, the system would be stuck waiting forever!
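The persistence-timer behavior can be sketched as a loop that keeps probing a zero window. In this Python model the receiver's window update is assumed lost, so the sender only learns about the new space through a probe. The function name, the doubling of the interval between probes, its 60-second cap, and the 600-second give-up point are all assumptions for illustration.

```python
from typing import Callable, Optional, Tuple


def probe_zero_window(window_at: Callable[[float], int],
                      first_interval: float = 1.0,
                      max_interval: float = 60.0,
                      give_up_after: float = 600.0) -> Tuple[Optional[float], int]:
    """Persistence-timer loop for a sender facing a zero window.

    window_at(t) models the receiver's free buffer space at time t. Returns
    (time the open window was seen, number of probes sent), or (None, probes)
    if the window never opened before give_up_after.
    """
    t, interval, probes = 0.0, first_interval, 0
    while t + interval <= give_up_after:
        t += interval                      # persistence timer expires
        probes += 1                        # send a window probe
        if window_at(t) > 0:               # the probe's reply carries the new window
            return t, probes
        interval = min(interval * 2, max_interval)   # assumed backoff between probes
    return None, probes


# The receiver frees 4096 bytes at t = 5 s, but its window update is lost.
print(probe_zero_window(lambda t: 4096 if t >= 5 else 0))   # (7.0, 3)
```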

Of course, the above assumes that the problem is due to congestion. It is also possible that there is no congestion at all, but that the entity you are communicating with is no longer available or has gone offline. It is therefore also important not to keep trying to send messages to this entity forever, but to also maintain a keep-alive timer which occasionally causes a message to be sent to the receiver asking, “are you still there?”. If no response is received, the connection should be terminated so resources are not wasted sending messages to an entity that is no longer available to receive them.
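Most operating systems implement this keep-alive timer themselves, and an application turns it on per socket. The Python sketch below enables it with the standard `SO_KEEPALIVE` option and, where the platform exposes them (Linux does, for example), sets the idle time, probe interval and probe count. `enable_keepalive` is a hypothetical helper, and the numbers are example values, not recommendations.

```python
import socket


def enable_keepalive(sock: socket.socket, idle: int = 60, interval: int = 10, count: int = 5) -> None:
    """Ask the OS to probe an idle TCP connection and drop it if the peer stops answering.

    idle: seconds of silence before the first probe; interval: seconds between probes;
    count: unanswered probes before the connection is declared dead (example values).
    """
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE, 1)
    # The following knobs are platform specific, so only set the ones this
    # platform's socket module actually exposes.
    if hasattr(socket, "TCP_KEEPIDLE"):
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPIDLE, idle)
    if hasattr(socket, "TCP_KEEPINTVL"):
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPINTVL, interval)
    if hasattr(socket, "TCP_KEEPCNT"):
        sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_KEEPCNT, count)


with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
    enable_keepalive(sock)
    print(sock.getsockopt(socket.SOL_SOCKET, socket.SO_KEEPALIVE) != 0)  # True
```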